Analyzing Reddit Comments with Python - FIRE Subreddit

Learn how to use Python's API and Plotly to analyze a popular subreddit's daily post over the past 100 days.

What is the average, median and max dollar amount that's referenced on a popular finance subreddit? This is the question I sought after.

I browse a few finance related subreddits and I was always curious to see how often a certain dollar amount is referenced in the comments. Instead of picking a few arbitrary numbers I decided to gather a few baseline statistics, like the average, median and max dollar amounts that are referenced in the comment section of posts.

For this scenario I pulled from the FIRE, /r/financialindependence subreddit using reddit's API. This subreddit has a daily post titled "Daily Discussion". I figured this would be fairly easy to pull and aggregate from, given the consistency of the daily post titles. In the future it would be interesting to see how these numbers compare with another finance subreddit like /r/personalfinance.

Authenticating

Reddit's API is super straightforward, I created a separate account solely for Reddit projects to keep things clean from my main account. Refer to their documentation to get your credentials.

These are the parameters you'll need to fill in:

#private creds here

reddit = praw.Reddit(

client_id=,

client_secret=,

user_agent=,

username=,

password=,

)

Subreddit Search

In my case, the daily FIRE posts have the exact same title. As such, I didn't have to do any tweaking to capture only daily discussion posts

- Choose subreddit

- Search posts within that subreddit. This will return an object of submission IDs matching the search

- Convert into a list of string values

subreddit = reddit.subreddit("financialindependence")

submissions = subreddit.search(

"Daily FI discussion thread", limit=100

) # returns submission ids matching this, it's an object(iterator)

submission_list = list(submissions) # list of string values for each daily id

Creating a Submission Instance

The prior step just gathered the IDs of each submission, now we need to instantiate each submission to gather the metadata associated with each submission.

submission_classes = []

for x in submission_list:

submission_classes.append(reddit.submission(

x))

Grabbing Comments

Now we need to loop through each submission and grab the comment body in each submission.

- Loop through each submission

- Loop through each comment in the comment body of each submission

This line below comments.replace.more will dictate how far down the comment forest the API needs to crawl. With a limit set to None, this took hours to run. I chose to set the limit at 0 which should just gather the top level comments.

for submissionx in submission_classes:

submissionx.comments.replace_more(limit=None)

sub_title = submissionx.title

Given my goal, I only need comments that contain a USD currency symbol to extract my needed data points. To do that I used a regular expression matching '$' anywhere in the comment:

for comment in submissionx.comments.list():

comment_list.append(comment.body.encode(

"utf-8"))

Normalizing Comments

Of course there are many ways someone can write money. Such as:

- $20k

- $20k-50k

- $1M

- $1,353

- $50k/year

- To normalize these comments I first replaced each common shorthand with the respective number of 0s using a dictionary:

replace_dict = {

'k': '000',

'K': '000',

'M': '000000',

'm': '000000',

'million': '000000',

'Million': '000000',

',': ''

}

#If dollar matches isn't blank, regex matched on a dollar comment

if dollar_matches:

for key, value in replace_dict.items():

dollar_matches = [w.replace(key, value) for w in dollar_matches]

for item in dollar_matches:

comment_list.append(item)

- The second biggest normalization was to isolate ranges and 'per' comments such as $50k/year. To do that, I used another regular expression to extract the maximum numerical value in a comment. This does reduce the accuracy if a comment happens to have two separate dollar references but I felt the reduction in noise was better than a few missed numbers.

normalized_dollars_per_submission = []

for x in comment_list:

list_to_int = list(map(int, re.findall(r"\d+", x)))

normalized_dollars_per_submission.append(max(list_to_int))

As an example, '$50K/year' will be normalized to '50000/year' and then extracted as just '50000' through this process.

Statistics and Writing to CSV

Lastly, gather a few baseline stats using the statistics library and write to a CSV.

average_amt = statistics.mean(all_normalized_comments)

max_amt = max(all_normalized_comments)

median_amt = statistics.median(all_normalized_comments)

ziplist = [average_amt,max_amt,median_amt]

csv_columns_second = [

'average', 'max', 'median'

]

csv_file = "fire_comments_aggregated.csv"

try:

with open(csv_file, 'w',newline="") as csvfile:

writer = csv.writer(csvfile)

writer.writerow(csv_columns_second)

writer.writerow(ziplist)

except IOError:

print("I/O error")

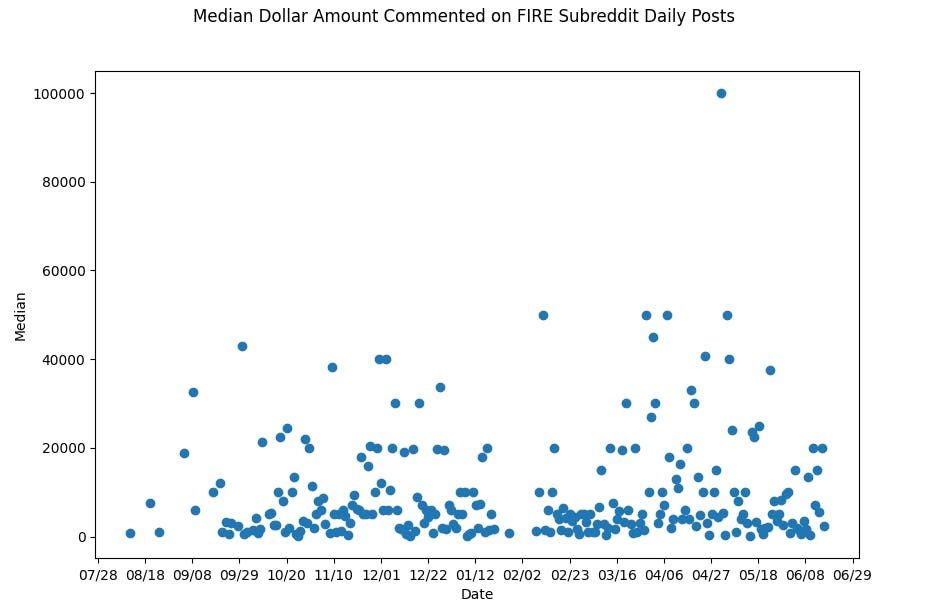

Plotting by Daily Post

This was an aside and not something I originally set out to do, mainly because this analysis doesn't provide many meaningful insights but it's interesting to look at nonetheless! Below is the median dollar amount referenced in all top level comments for the FIRE subreddit daily post, plotted by day for the past 250 days.

df = pd.read_csv('fire_comments.csv', parse_dates=['date'],

index_col=['date'])

fig, ax = plt.subplots(figsize=(10, 10))

plt.xlabel('Date')

plt.ylabel('Median')

plt.suptitle('Median Dollar Amount Commented on FIRE Subreddit Daily Posts')

plt.scatter(df.index.values, df.mediann)

ax.xaxis.set_major_locator(mdates.WeekdayLocator(interval=3))

ax.xaxis.set_major_formatter(DateFormatter("%m/%d"))

plt.show()

Aggregate Conclusion

The following statistics are from the top level normalized comments of the FIRE subreddit daily posts for the period: Aug 11th 2020 - June 16th 2021

| Statistic | Value |

| Average | $161,132,479,080 |

| Median | $5,000 |

| Max | $800,000,000,000,000 |